By detecting anomalies automatically, we can identify problems in a system and take action before greater damage is done. There are various established techniques for detecting anomalies in time-series data, for example dimension reduction with principal component analysis. Recently, deep-learning techniques have been applied to the detection of anomalies as well. Typically, some form of sequential model (e.g. Long Short-Term Memory or Gated Recurrent Unit) is applied for this task.

These types of models are similar to models that have been used in text analysis and natural language processing (NLP). This is not much of a surprise, as text is also a form of sequential data with many complex interdependencies. In recent years, major improvements have been made in using deep-learning techniques for NLP, resulting in models that can, for example, translate text in a way nearly indistinguishable from human translations. One type of architecture that is the basis for many current state-of-the-art language models is the transformer architecture. It leverages several concepts (such as encoder/decoder, positional encoding and attention) that enable the respective models to cope efficiently with complex relationships between variables, especially those with long-ranging temporal dependencies. In this paper we demonstrate how the transformer architecture can be deployed for anomaly detection.

Transformer Deep-Learning Architecture

For the anomaly detection we employ the popular transformer architecture, which has been especially successful in the field of natural language processing and excels at transforming sequences. The transformer architecture uses an encoder to compress an input sequence into a fixed-size vector capturing the characteristics of the sequence.
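The encoder concepts named in this section (positional encoding and attention) can be illustrated with a small NumPy sketch. This is not the model used in the text: the single attention head, the random weight matrices, the function names, and the mean pooling used to obtain a fixed-size vector are all assumptions chosen to keep the example short.

```python
import numpy as np

def positional_encoding(seq_len, d_model):
    """Sinusoidal positional encoding; d_model is assumed to be even."""
    positions = np.arange(seq_len)[:, None]        # shape (seq_len, 1)
    dims = np.arange(0, d_model, 2)[None, :]       # even dimension indices 2i
    angles = positions / np.power(10000.0, dims / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)                   # even dimensions
    pe[:, 1::2] = np.cos(angles)                   # odd dimensions
    return pe

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over one sequence."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # row-wise softmax
    return weights @ v, weights

def encode(x, w_q, w_k, w_v):
    """Add positions, attend, then mean-pool to a fixed-size vector.

    Mean pooling is an assumption made for this sketch only.
    """
    x = x + positional_encoding(*x.shape)
    attended, _ = self_attention(x, w_q, w_k, w_v)
    return attended.mean(axis=0)

# Hypothetical input: 12 time steps with 8 features each.
rng = np.random.default_rng(0)
seq_len, d_model = 12, 8
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))
summary = encode(x, w_q, w_k, w_v)
```

Whatever the sequence length, `summary` has `d_model` entries, which is the sense in which the sequence is compressed into a fixed-size vector here. A trained model would learn `w_q`, `w_k` and `w_v` rather than draw them at random.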

Anomalies are parts of a time series that are considered not normal, for example because they were collected from a machine suffering degradation.

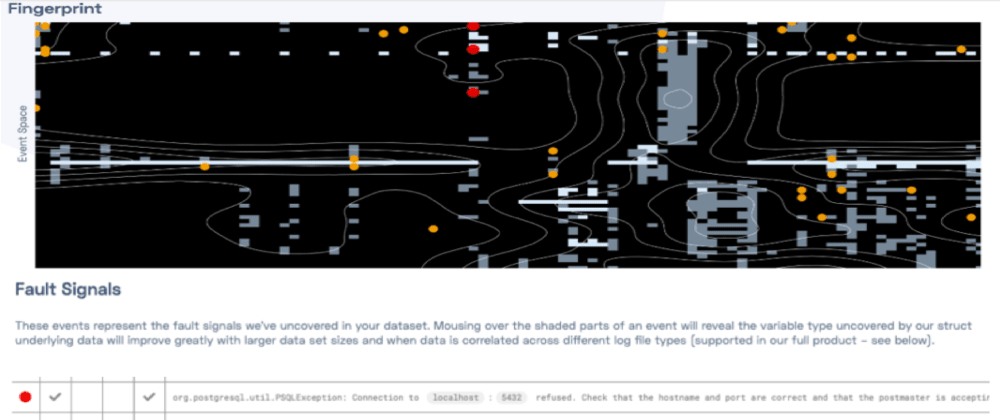

#Anomaly detection machine learning software#

Anomaly-Detection with Transformers Machine Learning Architecture

Anomaly Detection

Anomaly detection refers to the problem of finding anomalies in (usually) large datasets. Often, we are dealing with time-dependent or at least sequential data, originating, for example, from software logs or from sensor values of a machine or a physical process.

Here, I kept a copy of my model and didn’t train it at all for several months. We can see from both the histogram and the line plot that the model shows signs of degradation after June 2021. A previous data analysis suggested that the model only had to be re-trained every year, but this anomaly analysis suggests that it should rather happen after 4 months.

Handling anomalies

Anomalies can happen for multiple reasons, for example because:

- There is an anomaly in the data processing pipeline
- The model is unstable or has to be re-trained
- There is an external factor. For instance, if there is an economic crisis, most observations will have a sharp increase in their default score because their health has declined. Anomalous does not necessarily mean incoherent.

In my case, the data processing pipeline wasn’t definitive yet and, even though the model’s re-training frequency had already been inferred, I wanted to monitor it on current data. Therefore, CLT-based anomaly detection was necessary mainly for the first two cases.
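The text does not spell out how the CLT-based check works, so the following is only one plausible reading. By the central limit theorem, the mean score of a batch of n observations should lie within a few standard errors (sigma / sqrt(n)) of the reference mean. The function name, the threshold of 3 and the toy data are all assumptions.

```python
import numpy as np

def clt_anomaly_flags(reference, batches, z_threshold=3.0):
    """Flag batches whose mean score deviates from the reference mean.

    Assumption: under normal conditions a batch mean is approximately
    normal with standard error sigma / sqrt(n) (central limit theorem).
    """
    mu = np.mean(reference)
    sigma = np.std(reference, ddof=1)
    flags = []
    for batch in batches:
        standard_error = sigma / np.sqrt(len(batch))
        z = (np.mean(batch) - mu) / standard_error
        flags.append(bool(abs(z) > z_threshold))
    return flags

# Hypothetical scores: a reference period and two later monthly batches.
reference = np.tile([0.1, 0.3], 500)   # mean 0.2
month_ok = np.tile([0.1, 0.3], 50)     # same distribution as the reference
month_shifted = month_ok + 0.05        # every score drifts upward by 0.05
flags = clt_anomaly_flags(reference, [month_ok, month_shifted])
```

With this toy data only the shifted month is flagged. A flag like this says that the batch differs from the reference period, not why it differs, so a pipeline fault, model degradation and an external factor still have to be told apart by inspection.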
